I’d like to “delve” into how AI is “fostering” changes in writing

Research about pre- and post-ChatGPT changes in vocabulary and sentence structure

A personal note: I’d appreciate it if you would subscribe and like this post! Thank you!

Certain words now flash in my head with a red-light warning: AI wrote this! My students and I joke quite often that the words delve and leverage seem AI-written. Before ChatGPT, I rarely encountered them. Post-ChatGPT, “delve” is all over the internet. I overhear people using the word in casual conversation. Honestly, I’m not sure exactly why these particular words became the vocabulary of AI writing in my head.

I think it started with the inordinate flexibility of these words. “Leverage” has always struck me as a sort of nasty venture capitalist word, associated with private equity firms that buy companies through leverage (that is, with borrowed money). “Leverage” can describe almost any activity, but with a veil that softens the blow. It can mean a lot of things to a lot of people. It’s vague. As I’ve written before, AI writing forces the reader to concede that the sentence is probably, mostly, fairly, somewhat correct. Approximately correct.

But now there is actual evidence behind my jokes. In this paper from researchers in Finland, “How Large Language Models Are Changing MOOC Essay Answers: A Comparison of Pre- and Post-LLM Responses,”[i] the authors studied changes in student writing pre- and post-ChatGPT. Specifically, they studied essays from an online course about AI ethics. They write, “our data consisted of a total of 56,878 English-language essay submissions. The earliest submission included in the dataset was from November 2020, and the latest from October 2024” (p. 3). It’s a fantastic study with reliable data (I’ve become enamored with developing baselines of pre-ChatGPT writing). The methods are exemplary in my view.

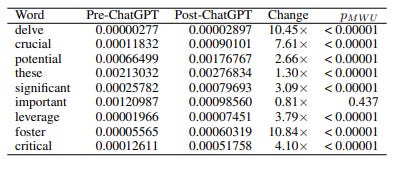

Their results are striking to me. The following words spiked in usage:

Table 1: “Prevalence of key stylistic terms before and at least one year after the release of ChatGPT. ‘Change’ indicates the magnitude of change (e.g., the relative usage of the term ‘delve’ increased 10.45-fold). pMW U is the p-value from a Mann-Whitney U test” (Leppänen et al., 2025, p. 6). Table is from the article.
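The caption’s pMW U column comes from a Mann-Whitney U test, which checks whether values in one group tend to be larger than values in another by ranking all of them together instead of assuming a bell curve. Here is a minimal sketch in plain Python, assuming the inputs are per-essay usage rates for a single word (the numbers below are hypothetical) and using the large-sample normal approximation without a tie correction. This is not the authors’ code; in practice, `scipy.stats.mannwhitneyu` handles ties and small samples more carefully.

```python
import math
from typing import Sequence


def rank_with_ties(values: Sequence[float]) -> list[float]:
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(pre: Sequence[float], post: Sequence[float]) -> tuple[float, float]:
    """Two-sided Mann-Whitney U test via the normal approximation.

    Returns (U for the 'pre' group, p-value). Simplification: the variance
    is not corrected for ties.
    """
    n1, n2 = len(pre), len(post)
    ranks = rank_with_ties(list(pre) + list(post))
    r1 = sum(ranks[:n1])                  # rank sum of the 'pre' group
    u1 = r1 - n1 * (n1 + 1) / 2           # U statistic for the 'pre' group
    mu = n1 * n2 / 2                      # expected U if both groups are alike
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return u1, p


# Hypothetical per-essay rates of "delve" (uses per 1,000 words).
pre_rates = [0.0, 0.0, 0.1, 0.0, 0.2]
post_rates = [0.5, 0.9, 0.4, 1.2, 0.7]
u, p = mann_whitney_u(pre_rates, post_rates)
print(f"U = {u}, p = {p:.4f}")
```

With these made-up rates, every post-ChatGPT essay uses the word more than every pre-ChatGPT essay, so U is 0 and the p-value falls below 0.05.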

The word “foster” saw a 10.8X increase post-ChatGPT! “Delve” is used 10.5X more often! As I noted above, now I read these words everywhere in online content.
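Numbers like “10.45-fold” are ratios of relative frequencies: how often a word appears after ChatGPT divided by how often it appeared before. A minimal sketch, assuming “relative usage” means occurrences per token pooled across all essays in each period. The paper’s exact definition of prevalence may differ (it could, for instance, be the share of essays that use the word), and the crude tokenizer below matches only the exact form, so “delves” or “delving” would be missed. The function names are my own.

```python
import re
from typing import Iterable

TOKEN_RE = re.compile(r"[a-z']+")


def tokenize(text: str) -> list[str]:
    """Crude lowercase word tokenizer (an assumption, not the paper's tokenizer)."""
    return TOKEN_RE.findall(text.lower())


def rate_per_token(essays: Iterable[str], term: str) -> float:
    """Exact-form occurrences of `term` divided by total tokens across essays."""
    hits = total = 0
    for essay in essays:
        tokens = tokenize(essay)
        total += len(tokens)
        hits += tokens.count(term)
    return hits / total if total else 0.0


def fold_change(pre: Iterable[str], post: Iterable[str], term: str) -> float:
    """How many times more often `term` appears per token after vs. before."""
    before = rate_per_token(pre, term)
    after = rate_per_token(post, term)
    if before == 0:
        # Undefined ratio: infinite if the word is new, NaN if it never appears.
        return float("inf") if after > 0 else float("nan")
    return after / before


pre_essays = ["We look at the findings in detail.", "Ethics matters, so we delve a little."]
post_essays = ["Let us delve into the findings and delve deeper."]
print(round(fold_change(pre_essays, post_essays, "delve"), 2))
```

In this toy example, “delve” goes from 1 in 14 tokens to 2 in 9 tokens, a fold change of about 3.1.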

The study also documents broader changes in the writing. Here are four structural changes the authors identify:

Figure 1: “Key text statistics. Line indicates mean, and the shaded area indicates the first and third quartiles of the data. Data in monthly bins. The first vertical line indicates the release of ChatGPT, and the second vertical line indicates one year after the release” (Leppänen et al., 2025, p. 5). Figure is from the article.

Let me explain (not delve into, mind you) what these stats mean. The first is token count (a), which is a proxy for the number of words. The second is sentence length (b). The third is a standard reading-level score, Flesch Reading Ease (c). And the fourth is type-token ratio (d), which measures the diversity of vocabulary: the number of distinct words (types) divided by the total number of words (tokens). I’ve used type-token ratio before in a publication. Like all measures, it’s super interesting but requires a lot of interpretation.
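To make these definitions concrete, here is a rough sketch of three of the four statistics in Python. It assumes “tokens” are simply runs of letters and apostrophes and that sentences end at a period, exclamation point, or question mark; the study likely used a proper tokenizer and sentence splitter, so its numbers would differ. `text_stats` is a hypothetical helper name.

```python
import re

WORD_RE = re.compile(r"[A-Za-z']+")
SENTENCE_SPLIT_RE = re.compile(r"[.!?]+")


def text_stats(text: str) -> dict[str, float]:
    """Token count, mean sentence length (in tokens), and type-token ratio."""
    words = [w.lower() for w in WORD_RE.findall(text)]
    # Drop empty fragments, e.g. the one after the final period.
    sentences = [s for s in SENTENCE_SPLIT_RE.split(text) if s.strip()]
    n_tokens = len(words)
    n_types = len(set(words))  # distinct words
    return {
        "tokens": n_tokens,
        "mean_sentence_length": n_tokens / len(sentences) if sentences else 0.0,
        "ttr": n_types / n_tokens if n_tokens else 0.0,
    }


print(text_stats("The cat sat. The cat ran away."))
```

For that sample, the sketch counts 7 tokens across 2 sentences (a mean sentence length of 3.5) and a TTR of 5/7, about 0.71, because “the” and “cat” each appear twice.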

In sum: post-ChatGPT, student answers got longer, both in raw number of words and in sentence length. Both went up substantially. The reading level became more complex (on the Flesch Reading Ease scale, a lower score means harder text), but not by much, especially considering the spread of the data (the blue shaded area, which marks the first and third quartiles). The type-token ratio (TTR) went down. Basically, despite written answers getting longer and more complex post-ChatGPT, the answers use a narrower range of vocabulary.
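Flesch Reading Ease is a simple formula: 206.835 - 1.015 × (words per sentence) - 84.6 × (syllables per word). Higher scores mean easier text, so longer sentences and longer words both push the score down. Here is a sketch, assuming a crude vowel-group syllable counter; real readability tools use more careful rules or pronunciation dictionaries, and the helper names are hypothetical.

```python
import re

WORD_RE = re.compile(r"[A-Za-z']+")
SENTENCE_SPLIT_RE = re.compile(r"[.!?]+")
VOWEL_GROUP_RE = re.compile(r"[aeiouy]+")


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(VOWEL_GROUP_RE.findall(word))
    # "delve" -> 1, but keep the 'e' in "-le" endings like "table" -> 2.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * words/sentence - 84.6 * syllables/word."""
    words = WORD_RE.findall(text)
    sentences = [s for s in SENTENCE_SPLIT_RE.split(text) if s.strip()]
    if not words or not sentences:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


print(flesch_reading_ease("The cat sat."))
print(flesch_reading_ease("Organizations increasingly leverage sophisticated methodologies."))
```

With this heuristic, “The cat sat.” scores about 119, while the jargon-heavy second sentence scores far below zero, which the formula allows for very dense text.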

Here is one interpretation of the TTR finding. A lower TTR generally implies that answers are less varied. This might signal that students are getting better at finding the answer, at least in the sense that they might be writing the same sort of response (I couldn’t find whether the answers were more or less correct post-ChatGPT). In any case, the decrease in TTR indicates a narrower variety of words.
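One reason TTR needs careful interpretation is that it is sensitive to text length: as a text grows, each new word is more likely to be one already used, so TTR tends to drop even if the writer’s vocabulary hasn’t shrunk. Since the post-ChatGPT answers were also longer, part of the drop could be a length effect; I don’t know whether the study corrected for this. A common workaround is the moving-average type-token ratio (MATTR), which averages TTR over fixed-size windows. The sketch below is illustrative; the function names and window sizes are my own choices.

```python
def ttr(tokens: list[str]) -> float:
    """Plain type-token ratio: distinct tokens / total tokens."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def mattr(tokens: list[str], window: int = 50) -> float:
    """Moving-average TTR: mean TTR over every run of `window` consecutive tokens."""
    if len(tokens) <= window:
        return ttr(tokens)  # text too short for a full window
    scores = [ttr(tokens[i:i + window]) for i in range(len(tokens) - window + 1)]
    return sum(scores) / len(scores)


short_text = ["a", "b", "c", "d", "e"]
long_text = short_text * 10  # same five words, ten times as long
print(ttr(short_text), ttr(long_text))
print(mattr(long_text, window=5))
```

Repeating the same five words ten times cuts plain TTR from 1.0 to 0.1, while MATTR with a five-word window stays at 1.0, because every window still contains five distinct words.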

The most obvious explanation is the introduction of ChatGPT. What’s not clear to me is the mechanism: why ChatGPT would produce these particular changes. I can’t really put my finger on the sentence structure findings. But let me explore what might be happening with the vocabulary.

AI words are adjacent to analysis

GenAI’s vocabulary, such as the words delve, leverage, and crucial (see Table 1), is adjacent to analysis, at least in terms of the training data. These words signal that analysis is nearby, but they aren’t analysis themselves. For example, writers often “delve into the findings” and then discuss the actual findings concretely. But AI writing doesn’t have any findings, so it reaches for average words that sit close to analysis it doesn’t “understand.” And how do machines get average words? They favor the common words and ignore the more idiosyncratic ones. As a result, AI chatbots strip away the actual analysis, leaving only words that indicate analysis is nearby in the paragraph.

Conclusion: AI writing is already in the water

If we take the findings just in terms of writing in general, and not related to MOOCs, we can make two suppositions. First, if writers are using GenAI, then it is having a clear effect on the writing. The writing that these GenAI chatbots produce is longer and more complex sentence-wise but more simplistic in terms of vocabulary. Second, even if the writers in the study aren’t using GenAI, the milieu of AI chatbots is already having an effect. I find this fascinating and a bit troubling. The authors alluded to this in their discussion:

“An open question, however, is to what extent the use of LLMs has already influenced language use, making what we consider ‘LLM-indicator’ terms equivalently common in truly human-produced language. This could happen, e.g., where students extensively use LLMs as an aid while learning English, and thus pick up the idiosyncrasies of LLMs to use in their own writing as well” (p. 7).

Even if we want to avoid AI chatbots, they’re already having effects. They’re already “in the water” so to speak.

References

[i] Leppänen, L., Aunimo, L., Hellas, A., Nurminen, J. K., & Mannila, L. (2025). How Large Language Models Are Changing MOOC Essay Answers: A Comparison of Pre- and Post-LLM Responses (No. arXiv:2504.13038). arXiv. https://doi.org/10.48550/arXiv.2504.13038

shared with colleagues in the English department. Other words have been jumping out this semester and last, such as the word "profound." I teach at a community college where the word profound was _never_ used by my students before. For one thing, we teach students to reject the use of subjective language in literary analysis (like "awesome" and "great"). But the word "profound" suggests a degree of knowledge and analytical expertise that privileges it as an adjective over these other words, so it didn't jump out to me at first. Until I remembered: my students have no idea what is profound and what isn't. They don't have the experience as readers, and so the word choice is completely inauthentic. It's driving me crazy, and I have to think about how to teach them to use it. This is an imperative coming from the top, and I can't stand it -- I feel like a hostage to big tech.

You may also be interested in our study of ChatGPT's and Llama's grammatical and rhetorical structure, which varies from human writing just as strongly as its vocabulary does: https://arxiv.org/abs/2410.16107

Our suspicion is that this is driven by the instruction-tuning process. The Llama base models, which do pure text completion, are pretty similar to humans. The instruction-tuned variants with post-processing to follow instructions and complete tasks are quite different from humans. Possibly there's something about the instruction tuning tasks and the feedback of the human raters that makes this happen.